{Update November 2015: Google rolled out a significant algorithm update on November 19, 2015 that had a strong connection to Phantom 2 from May 2015. Many sites that were impacted during Phantom 2 in May were also impacted on November 19 during Phantom 3. And a number of companies that had been working to rectify problems saw full or partial recovery. You can learn more about Google’s Phantom 3 Update in my post covering a range of findings.}

My Google Richter Scale was insanely active the week of April 27, 2015. That’s when Google rolled out a change to its core ranking algorithm to better assess quality signals. I called the update Phantom 2, based on the mysterious nature of the update (and since Google would not confirm it at the time). It reminded me a lot of the original Phantom update, which rolled out on May 8, 2013. Both were focused on content quality and both had a big impact on websites across the web.

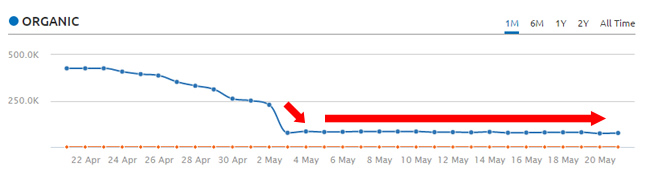

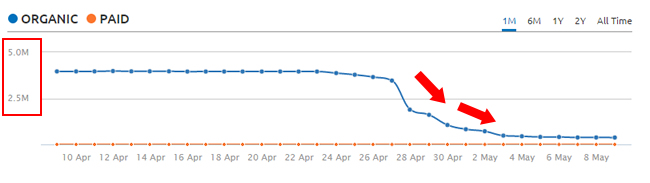

I heavily analyzed Phantom 2 soon after the update rolled out, and during my analysis, I saw many sites swing 10-20% either up or down, while also seeing some huge hits and major surges. One site I analyzed lost ~80% of its Google organic traffic overnight, while another surged by 375%. And no, I haven’t seen any dramatic Phantom 2 recoveries yet (I never expected them to happen that quickly). More about that soon.

Also, based on my first post, I was interviewed by CNBC about the update. Between my original post and the CNBC article, the response across the web was amazing. The CNBC article has now been shared over 4,500 times, which reflects how widely Phantom was felt across the web. Just like Phantom 1 in 2013, Phantom 2 was significant.

Phantom and Panda Overlap

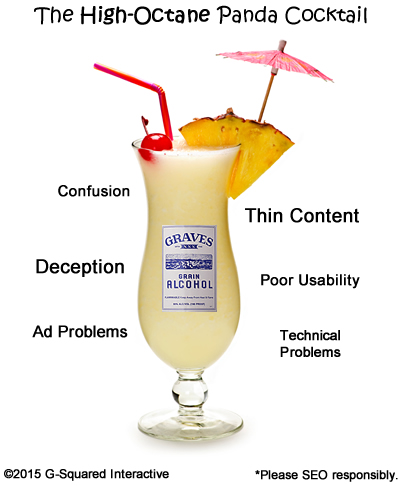

While analyzing the impact of Phantom 2, it wasn’t long before I could clearly see that the update heavily targeted content quality. I was coming across seriously low quality content, user engagement problems, advertising issues, etc. Many (including me) initially thought it was a Panda update based on seeing the heavy targeting of content quality problems.

And that made sense timing-wise, since the last Panda update was over seven months ago (10/24/14). Many have been eagerly awaiting an update or refresh since they have been working heavily to fix any Panda-related problems. It’s not cool that we’ve gone over seven months when Panda used to roll out frequently (usually about once per month).

But we have good news out of SMX Advanced. Gary Illyes explained during his AMA with Danny Sullivan that the next Panda refresh would be within the next 2-4 weeks. That’s excellent news for many webmasters that have been working hard on Panda remediation. So definitely follow me on Twitter since I’ll be covering the next Panda refresh/update in great detail. Now back to Phantom.

After Phantom rolled out, Google denied that it was Panda (or Penguin) and simply said this was a “normal update”. And then a few weeks later, they explained a little more about our ghostly friend. Gary Illyes said it was a change to Google’s core ranking algorithm with how it assesses “quality signals”. Ah, that made a lot of sense based on what I was seeing…

So it wasn’t Panda, but it did focus on quality. And as I’ve said many times before, “content quality” can mean several things. You have thin content, low quality affiliate content, poor user experience, advertising obstacles, scraped content, and more. Almost every problem I came across during my Phantom analysis would have been something I would have targeted from a Panda standpoint. So both Phantom and Panda seem to chew on similar problems. More about this soon.

Phantom Recovery and A Long-Term Approach

So, Panda targets low quality content and can drag an entire site down in the search results (it’s a domain-level demotion). And now we have Phantom, which changed how Google’s core ranking algorithm assesses “quality signals”. The change can boost urls with higher quality content (which of course means urls with lower quality content can drop).

It’s not necessarily a filter like Panda, but it can act the same way for low quality content on your site. Google says it’s a page-level algorithm, so it presumably won’t drag an entire domain down. The jury is still out on that… Either way, if you have a lot of low quality content, it can sure feel like a domain-level demotion. For example, I’ve seen some websites get obliterated by the Phantom update, losing 70%+ of their traffic starting the week of April 27th. Go tell the owner of that website that Phantom isn’t domain-level. :)

URL Tinkering – Bad Idea

So, since Phantom is page-level, many started thinking they could tinker with a page and recover the next time Google crawled the url. I never thought that could happen, and I still don’t. I believe it’s way more complex than that… In addition, that approach could lead to a lot of wheel-spinning SEO-wise. Imagine webmasters tinkering endlessly with a url trying to bounce back in the search results. That approach can drive most webmasters to the brink of insanity.

I believe it’s a complex algorithm that also takes other factors into account (like user engagement and some domain-level aspects). For example, there’s a reason that some sites can post new content, have that content crawled and indexed in minutes, and even start ranking for competitive keywords quickly. That’s because they have built up trust in Google’s eyes. And that’s not page-level… it’s domain-level. And from an engagement standpoint, Google cannot remeasure engagement quickly. It needs time, users hitting the page, understanding dwell time, etc. before it can make a decision about recovery.

It’s not binary like the mobile-friendly algorithm. Phantom is more complex than that (in my opinion).

John Mueller’s Phantom Advice – Take a Long-Term Approach To Increasing Quality

Back to “url tinkering” for a minute. Back when Phantom 1 hit in May of 2013, I helped a number of companies deal with the aftermath. Some had lost 60%+ of their Google organic traffic overnight. My approach was very similar to Panda remediation. I heavily analyzed the site from a content quality standpoint and then produced a serious remediation plan for tackling those problems.

I didn’t take a short-term approach, I didn’t believe it was page-level, and I made sure any changes clients would implement would be the best changes for the long-term success of the site. And that worked. A number of those companies recovered from Phantom within a six month period, with some recovering within four months. I am taking the same approach with Phantom 2.

And Google’s John Mueller feels the same way. He was asked about Phantom in a recent webmaster hangout video and explained a few key points. First, he said there is no magic bullet for recovery from a core ranking change like Phantom. He also said to focus on increasing the quality on your site over the long-term. And that one sentence has two key points. John used “site” and not “page”. And he also said “long-term”.

So, if you are tinkering with urls, fixing minor tech issues on a page, etc., then you might drive yourself insane trying to recover from Phantom. Instead, I would heavily tackle major content quality and engagement problems on the site. Make big improvements content-wise, improve the user experience on the site, decrease aggressive advertising tactics, etc. That’s how you can exorcise Phantom.

More Phantom Findings

Over the past month, I’ve had the opportunity to dig into a number of Phantom hits. Those hits range from moderate drops to severe drops. And I’ve analyzed some sites that absolutely surged after the 4/29 update. I already shared several findings from a content quality standpoint in my first post about Phantom 2, but I wanted to share more now that I have additional data.

Just like with Panda, “low quality content” can mean several things. There is never just one type of quality problem on a website. It’s usually a combination of problems that yields a drop. Here are just a few more problems I have come across while analyzing sites negatively impacted by Phantom 2. Note, this is not a full list, but just additional examples of what “low quality content” can look like.

Thin Content and Technical Problems = Ghostly Impact

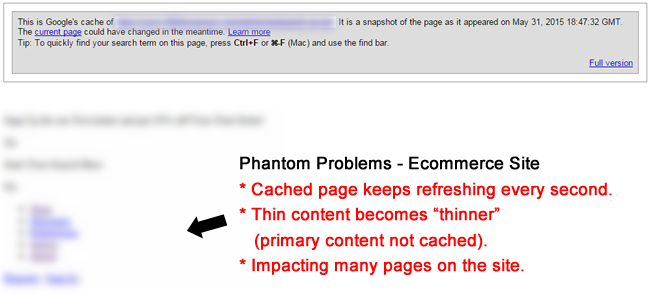

An ecommerce site that was heavily impacted by Phantom reached out to me for help. Once I dug into the site, the content quality problems were clear. First, the site had a boatload of thin content. Pages were extremely visual with no supporting content. The only additional content was triggered via a tab (and there wasn’t much added to the page once triggered). The site has about 25-30K pages indexed.

The site also used infinite scroll to display the category content. If set up properly SEO-wise, this isn’t a huge deal (although I typically recommend against using infinite scroll). But this setup had problems. When I dug into Google’s cache to see how the page looked, the page would continually refresh every second or so. Clearly there was some type of issue with how the pages were coded. In addition, when checking the text-only version, the little content that was provided on the page wasn’t even showing up. Thin content became even thinner…

So, you had extremely thin content that was being cut down to no content due to how the site was coded. And this problem was present across many urls on the site.
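A rough first check for this kind of problem is to count the words in the raw HTML a page returns, ignoring script and style blocks. Below is a minimal sketch, assuming you have already saved the page source locally; the names `VisibleTextCounter` and `visible_word_count` are hypothetical. It runs no JavaScript, so text that only appears after scripts execute is not counted. It is only an approximation of a text-only view, not how Google itself evaluates pages.

```python
from html.parser import HTMLParser


class VisibleTextCounter(HTMLParser):
    """Counts words in raw HTML, skipping <script> and <style> contents.

    No JavaScript is executed, so text injected client-side is not counted.
    """

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # >0 while inside a <script> or <style> element
        self.words = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only count text that sits outside script/style blocks.
        if self._skip_depth == 0:
            self.words += len(data.split())


def visible_word_count(html: str) -> int:
    """Hypothetical helper: number of whitespace-separated words in the markup."""
    parser = VisibleTextCounter()
    parser.feed(html)
    parser.close()
    return parser.words
```

Running it across a sample of category and product urls (for example, `visible_word_count(open("product.html").read())`) can quickly surface templates that return almost no text before JavaScript runs. Those pages are worth reviewing in a browser with JavaScript disabled.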

Directory With Search Results Indexed + Followed Links

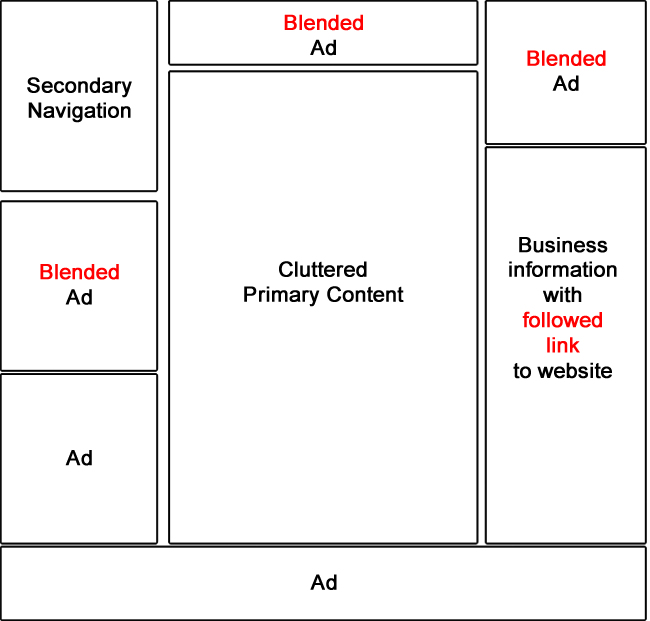

Another Phantom hit involved a large directory and forum. There are hundreds of thousands of pages indexed, and many traditional directory and forum problems are present. For example, the directory listings were thin, there was a serious advertising issue across those pages (including the heavy blending of ads with content), and internal search results were indexed.

In addition, and this was a big problem, all of the directory links were followed. Since those links are added by business owners, and aren’t natural, they should absolutely be nofollowed. I estimate that there are ~80-90K listings in the directory, and every one of them has a followed link to the website of the business that set up the listing. Not good.
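To audit a directory like this, you can scan listing pages for external links that lack `rel="nofollow"`. Here is a minimal sketch using only Python's standard library, assuming you have the HTML of each listing page and its url; `followed_external_links` and the example domains are hypothetical. It treats any other hostname (including a different subdomain) as external, which you may want to relax for your own site.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class FollowedExternalLinkFinder(HTMLParser):
    """Collects <a href> links that point off-site and lack rel="nofollow"."""

    def __init__(self, page_url: str) -> None:
        super().__init__()
        self.page_url = page_url
        self.site_host = urlparse(page_url).netloc.lower()
        self.followed_external: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if not href:
            return
        absolute = urljoin(self.page_url, href)  # resolve relative links
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            return  # skip mailto:, javascript:, etc.
        if parsed.netloc.lower() == self.site_host:
            return  # internal link
        rel_values = (attr_map.get("rel") or "").lower().split()
        if "nofollow" not in rel_values:
            self.followed_external.append(absolute)


def followed_external_links(html: str, page_url: str) -> list[str]:
    """Hypothetical helper: external links on the page that pass PageRank."""
    finder = FollowedExternalLinkFinder(page_url)
    finder.feed(html)
    finder.close()
    return finder.followed_external
```

Every url this returns for a listing page is a business-submitted link that is still followed, which is exactly the pattern described above.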

An example of a low quality directory page with followed links to businesses:

Rogue Low Quality Content Not Removed During Initial Panda Work

One business owner who had been heavily impacted by Panda and Penguin in the past reached out to me. They had worked hard to revamp the site, fix content quality problems, etc., and they ended up recovering (and surging) during Panda 4.1. They were negatively impacted by Phantom (not a huge drop, but about 10%).

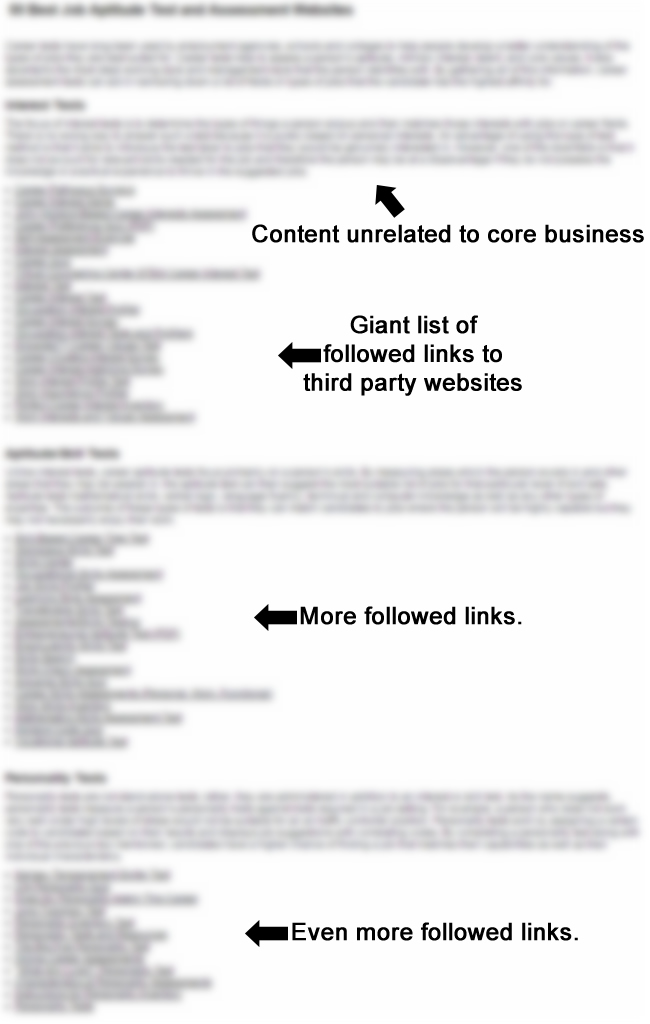

Quickly checking the site after Phantom 2 rolled out revealed some strange legacy problems (from the pre-recovery days). For example, I found some horrible low quality content that contained followed links to a number of third party websites. The page was part of a strategy employed by a previous SEO company (years ago). It’s a great example of rogue low quality content that can sit on a site and cause problems down the line.

Note, I’m not saying that one piece of content is going to cause massive problems, but it surely doesn’t help. Beyond that, there were still usability problems on the site, mobile problems, pockets of thin content, and unnatural-looking exact match anchor text links woven into certain pages. All of this together could be causing Phantom to haunt the site.

No, Phantoms Don’t Eat Tracking Codes

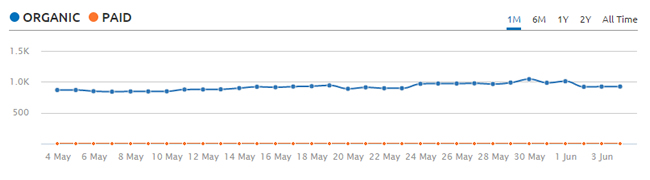

I’ll be quick with this last one, but I think it’s important to highlight. I received an email from a business owner who claimed their site had lost 90%+ of its traffic after Phantom rolled out. That’s a huge Phantom hit, so I was eager to review the situation.

Quickly checking third party tools revealed no drop at all. All keywords that were leading to the site pre-Phantom 2 were still ranking well. Trending was strong as well. And checking the site didn’t reveal any crazy content quality problems either. Very interesting…

So I reached out to the business owner and asked if it was just Google organic traffic that dropped, or if they were seeing drops across all traffic sources. I explained I wasn’t seeing any drops via third party tools, I wasn’t seeing any crazy content quality problems, etc. The business owner quickly got back to me and said it was a tracking code issue! Google Analytics wasn’t firing, so it looked like there was a serious drop in traffic. Bullet dodged.

Important note: When you believe you’ve been hit by an algo update, don’t simply rely on Google Analytics. Check Google Search Console (formerly Google Webmaster Tools), third party tools, etc. Make sure it’s not a tracking code issue before you freak out.
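One quick sanity check is to compare the trend in Google Analytics organic sessions with the trend in Search Console clicks over the same days. Sessions and clicks are different metrics, so the absolute numbers won't match, but a collapse in one while the other holds steady points to tracking rather than rankings. Here is a minimal sketch, assuming you have exported daily counts for a period before and after the suspected update; the function names and the `big_drop`/`small_drop` thresholds are hypothetical defaults, not established values.

```python
from statistics import mean


def pct_change(before: list[float], after: list[float]) -> float:
    """Relative change between period means (-0.9 means a 90% drop)."""
    base = mean(before)
    if base == 0:
        raise ValueError("baseline period has no traffic")
    return (mean(after) - base) / base


def looks_like_tracking_issue(
    ga_before: list[float],
    ga_after: list[float],
    gsc_before: list[float],
    gsc_after: list[float],
    big_drop: float = -0.5,    # hypothetical: GA fell by half or more
    small_drop: float = -0.2,  # hypothetical: GSC fell by less than 20%
) -> bool:
    """True when Analytics shows a big drop but Search Console clicks barely moved."""
    ga = pct_change(ga_before, ga_after)
    gsc = pct_change(gsc_before, gsc_after)
    return ga <= big_drop and gsc > small_drop
```

If this flags a drop, check whether the analytics snippet is actually present and firing on your pages before digging into content quality.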

Quality Boost – URLs That Jumped

Since Google explained that Phantom 2 is actually a change to its core ranking algorithm with how it assesses quality, it’s important to understand where pages fall flat, as well as where other pages excel. For example, some pages will score higher, while others will score lower. I’ve spent a lot of time analyzing the problems Phantom victims have content quality-wise, but I also wanted to dig into the urls that jumped ahead rankings-wise. My goal was to see if the Quality Update truly surfaced higher quality pages based on the query at hand.

Note, I plan to write more about this soon, and will not cover it extensively in this post. But I did think it was important to start looking at the urls that surged in greater detail. I began by analyzing a number of queries where Phantom victim urls dropped in rankings. Then I dug into which urls used to rank on page one and two, and which ones jumped up the rankings.

A Mixed Bag of Quality

Depending on the query, there were times when higher quality urls jumped up the rankings: pages that covered the subject in greater detail and provided an overall better user experience. For example, a search for an in-depth resource for a specific subject yielded some new urls in the top ten that provided a rich amount of information, organized well, without any barriers from a user engagement standpoint. It was a good example of higher quality content overtaking lower quality, thinner content.

Questions and Forums

For some queries I analyzed that were questions, Google seemed to be providing forum urls that contained strong responses from people that understood the subject matter well. I’m not saying all forums shot up the rankings, but I analyzed a number of queries that yielded high quality forum urls (with a lot of good answers from knowledgeable people). And that’s interesting since many forums have had a hard time with Panda over the years.

Navigational Queries and Relevant Information

I saw some navigational queries yield urls that provided more thorough information than just profile data. For example, I saw some queries where local directories all dropped to page two and beyond, while urls containing richer content surfaced on page one.

Local Example

From a pure local standpoint (someone searching for a local business), I saw some ultra-thin local listings drop, while other listings with richer information increased in rank. For example, pages with just a thumbnail and business name dropped, while other local listings with store locations, images, company background information, hours, reviews, etc. hit page one. Note, these examples do not represent the entire category… They are simply examples based on Phantom victims I am helping now.

In Some Cases, The “Lower Quality Update”?

The examples listed above show higher quality urls rising in the ranks, but that wasn’t always the case. I came across several queries where some of the top listings yielded lower quality pages. They did not cover the subject matter in detail, had popups immediately on load, the pages weren’t organized particularly well, etc.

Now, every algorithm will contain problems, yield some inconsistent results, etc., but I just found it ironic that the “quality update” sometimes surfaced lower quality urls on page one. Again, I plan to dig deeper into the “quality boost” from Phantom 2 in future posts, so stay tuned.

Next Steps with Phantom:

As mentioned earlier, I recommend taking a long-term approach to Phantom remediation. You need to identify and then fix problems riddling your sites from a content quality and engagement standpoint. Don’t tinker with urls. Fix big problems. And if Phantom 2 is similar to Phantom 1 from 2013, then that’s exactly what you need to focus on.

Here is what I recommend:

- Have a thorough audit conducted through a quality lens. This is not necessarily a full-blown SEO audit. Instead, it’s an audit focused on identifying content quality problems, engagement issues, and other pieces of bamboo that both Panda and Phantom like to chew on.

- Take the remediation plan and run with it. Don’t put band-aids on your website, and don’t implement 20% of the changes. Fix as many quality problems as you can, and as quickly as you can. Not only will Google need to recrawl all of the changes, but I’m confident that it will need to remeasure user engagement (similar to Panda). This is one reason I don’t think you can bounce back immediately from a Phantom hit.

- Have humans provide feedback. I’ve brought this up before in previous Panda posts, and it can absolutely help with Phantom too. Ask unbiased users to complete an action (or set of actions) on your site and let them loose. Have them answer questions about their visit, obstacles they came across, things they hated, things they loved, etc. You might be surprised by what they say. And don’t forget about mobile… have them go through the site on their mobile phones too.

- Continue producing high quality content on your site. Do not stop the process of publishing killer content that can help build links, social shares, brand mentions, etc. Remember that “quality” can be represented in several ways. Similar to Panda victims, you must keep driving forward like you aren’t being impacted.

- What’s good for Phantom should be good for Panda. Since there seems to be heavy overlap between Phantom and Panda, many of the changes you implement should help you on both levels.

Summary – Exorcise Phantom and Exile Panda

If you take one point away from this post, I hope you maintain a long-term view of increasing quality on your website. If you’ve been hit by Phantom, then don’t tinker with urls and expect immediate recovery. Instead, thoroughly audit your site from a quality standpoint, make serious changes to your content, enhance the user experience, and set realistic expectations with regard to recovery. That’s how you can exorcise Phantom and exile Panda.

Now go ahead and get started. Phantoms won’t show themselves the door. You need to open it for them. :)

GG